Multi Threading


Latest revision as of 13:12, 5 April 2022



“Multi-threading” is a term loosely applied both to running multiple threads and to running multiple processes on a single machine. However, it most usually refers to threads which belong to the same program and which cooperate closely; such cooperation is more likely at the thread level (within the same process) because communication is simpler there – e.g. using shared variables.

Benefits

Multi-threaded code can offer some significant advantages to the programmer, not least in enabling simpler source code. It can be easier to decompose a problem into semi-independent units, which operate in their own way in their own time, than to try to do all the required jobs with a single slab of code. This is often because the timing of functions is unpredictable, so writing them in a simple sequence may not match the most efficient order in which they could be scheduled.

Such parallel code, with unpredictable timing, is sometimes referred to as “asynchronous” code. When the code is written as multiple threads, it becomes the thread scheduler’s job to keep the processor(s) busy.

Example: consider a web-server which may take variable times to fetch or construct web pages as demanded; some of the time any request will be waiting for disk access; many requests for pages may arrive closely spaced in time. In this model it may make sense to create a thread for each request, which services (just) that request as a sequence of operations whilst other threads do similar things for other requests. The application does not need to care that other threads may want to overtake.
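The thread-per-request model described above can be sketched in Python roughly as follows. This is only an illustration under stated assumptions: `handle_request`, `serve` and the simulated delays are hypothetical names and values, not part of any real web server, and `time.sleep` merely stands in for waiting on disk access.

```python
import threading
import time


def handle_request(request_id, delay, results, results_lock):
    """Service one (simulated) request as a plain sequence of steps."""
    time.sleep(delay)  # stands in for waiting on disk access
    page = f"page for request {request_id}"  # hypothetical page content
    with results_lock:  # the results dictionary is shared between threads
        results[request_id] = page


def serve(delays):
    """Start one thread per request, then wait for all of them to finish."""
    results = {}
    results_lock = threading.Lock()
    threads = [
        threading.Thread(target=handle_request,
                         args=(i, delay, results, results_lock))
        for i, delay in enumerate(delays)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    pages = serve([0.03, 0.01, 0.02])
    for request_id in sorted(pages):
        print(request_id, pages[request_id])
```

Each handler is written as a simple sequence; a request with a short wait may finish before one that started earlier, and the handler code does not need to know.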

Another advantage of multi-threading is that processors are no longer getting much faster but, instead, proliferating in number. A typical workstation “CPU” may have six “cores” – complete processors – today (2022) but that number is set to increase. A small number of processors can be kept busy running completely independent processes, but keeping a large number of cores usefully busy is going to require multi-threaded applications. Thus, threading the code can lead to higher performance.

Specialist processors, such as Graphics Processing Units (GPUs), may already have 1000+ processors, although these may not all be completely independent and may share the same thread context.

Example: some ‘general purpose’ processors are coming to rival this scale, partly by relying on independent cores and partly by “hyper-threading” within a core. For example, the (at time of writing) latest “SPARC M8” (2017) has 32 processor cores, each with up to 8 threads (that’s up to 256 concurrent threads).

Another example: Intel Xeon Phi chips include ~60 processor cores, each capable of supporting four threads simultaneously.

Drawbacks

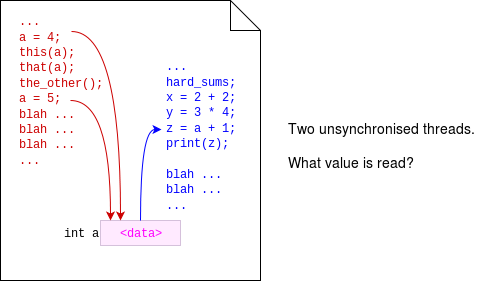

The biggest drawback to multithreading is that it is more difficult to program this way. ‘Traditional’ programming tends to consist of putting operations into a sequence; when operations are deliberately allowed to happen in an unpredictable order, people can get confused. This, in turn, can lead to subtle faults which appear only intermittently, making debugging difficult.
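One common source of such intermittent faults is several threads updating a shared variable at the same time. The sketch below, which uses hypothetical names (`Counter`, `run_workers`), shows one conventional remedy: protecting the update with a lock so that the read-modify-write step cannot be interleaved. It is an illustration only, not the only way to avoid the problem.

```python
import threading


class Counter:
    """A shared counter whose update is protected by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Without the lock, two threads could both read the same old value
        # and one of the increments would be lost, but only occasionally.
        with self._lock:
            self.value += 1


def run_workers(n_threads, n_increments):
    """Let several threads increment the same counter; return the total."""
    counter = Counter()

    def work():
        for _ in range(n_increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(run_workers(4, 1000))
```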

It is necessary to ensure that all dependencies in the code are guaranteed; i.e. it must be certain that one thread has reached a particular point (e.g. the disk has provided the data) before another thread continues past a point of its own. On the other hand, two (or more) threads must not act in a way where they will stop each other from proceeding – a condition known as “deadlock”.
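Both requirements can be illustrated with a short Python sketch. The first function guarantees a dependency with a `threading.Event`: the consumer cannot proceed until the producer has signalled that the data is present. The second shows one well-known way of avoiding deadlock when two locks are needed, namely always acquiring them in the same global order. All names here (`run_dependency_demo`, `with_both`, `run_lock_order_demo`) and the payload string are hypothetical, and ordering locks by `id` is just one convenient choice of fixed order.

```python
import threading


def run_dependency_demo():
    """The consumer must not read the data before the producer writes it."""
    data_ready = threading.Event()
    shared = {}
    output = []

    def producer():
        shared["data"] = "block from disk"  # hypothetical payload
        data_ready.set()  # signal that the dependency is now satisfied

    def consumer():
        data_ready.wait()  # block until the producer has signalled
        output.append(shared["data"].upper())

    # The consumer is started first on purpose; it still waits correctly.
    threads = [threading.Thread(target=consumer),
               threading.Thread(target=producer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return output


def with_both(lock_x, lock_y, action):
    """Acquire two locks in one fixed order (by id) and then run action."""
    first, second = sorted((lock_x, lock_y), key=id)
    with first:
        with second:
            action()


def run_lock_order_demo():
    """Two threads name the locks in opposite orders yet cannot deadlock."""
    lock_a, lock_b = threading.Lock(), threading.Lock()
    done = []
    threads = [
        threading.Thread(target=with_both,
                         args=(lock_a, lock_b, lambda: done.append(1))),
        threading.Thread(target=with_both,
                         args=(lock_b, lock_a, lambda: done.append(2))),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(done)


if __name__ == "__main__":
    print(run_dependency_demo())
    print(run_lock_order_demo())
```

If each thread instead took the locks in the order it was given, one thread could hold `lock_a` while waiting for `lock_b` and the other the reverse, and neither would ever proceed.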

These issues have been common in multiprocessing operating systems for a long time and are becoming increasingly important in applications programming.

That, in itself, is a Good Reason for studying this topic.

Also refer to: Operating System Concepts, 10th Edition: Chapter 4, pages 159-197