Buffer Overflow

m 1 revision imported |

m 1 revision imported |

||

| (One intermediate revision by one other user not shown) | |||

| Line 2: | Line 2: | ||

-->{{#invoke:Dependencies|add|Security,4|Memory,2}} | -->{{#invoke:Dependencies|add|Security,4|Memory,2}} | ||

<blockquote> | <blockquote> | ||

The buffer overflow vulnerability is a [[Security | security]] issue | The buffer overflow vulnerability is a [[Security|security]] issue worthy of particular mention because it is both a | ||

worthy of particular mention because it is both a | ‘classic’ attack and it illustrates several general principles, and some possible defences. | ||

‘classic’ attack and it illustrates several general | |||

principles, and some possible defences. | |||

</blockquote> | </blockquote> | ||

The boundary of a software structure, such as an array, will not be | The boundary of a software structure, such as an array, will not be checked in hardware; it is too expensive to provide hardware checking on a word-by-word basis and hardware usually relies on [[Memory_Pages | page]]-level checks. Testing array limits in software is also (time) expensive; it may be done as part of the software environment (e.g. Java) or it may be left to the programmer (e.g. C). Programmers <em>sometimes</em> (make that “usually”) omit the checks because they ‘know’ the index is within the limits. | ||

checked in hardware; it is too expensive to provide hardware checking | |||

on a word-by-word basis and hardware usually relies on | |||

[[Memory_Pages | page]]-level checks. Testing array limits in software | |||

is also (time) expensive; it may be done as part of the software | |||

environment (e.g. Java) or it may be left to the programmer (e.g. C). | |||

Programmers <em>sometimes</em> (make that “usually”) omit the | |||

checks because they ‘know’ the index is within the limits. | |||

A classic security flaw is when this is a false assumption and an | A classic security flaw is when this is a false assumption and an attacker can input more bytes than an allocated space will hold. The space is typically an input <strong>buffer</strong> and the input can <strong>overflow</strong> the area, unchecked. | ||

attacker can input more bytes than an allocated space will hold. The | |||

space is typically an input <strong>buffer</strong> and the input can <strong>overflow</strong> | |||

the area, unchecked. | |||

<blockquote> | <blockquote> | ||

A ‘classic’ example is the C language library <code>gets()</code> | A ‘classic’ example is the C language library <code>gets()</code> which fetches bytes from the <code>stdin</code> stream until the next ‘newline’ (or <code>EOF</code>). It is impossible to tell in advance how many characters (bytes) will be fetched so it is | ||

which fetches bytes from the <code>stdin</code> stream until the next | impossible to guarantee a big enough buffer. One infamous ‘hack’ in the past involved an exploitation of the Unix | ||

‘newline’ (or <code>EOF</code>). It is impossible to tell in | <strong>finger daemon</strong> which used this library call. <strong>Never use <code>gets()</code>.</strong> The problem is addressed by <code>fgets()</code> which has a similar function | ||

advance how many characters (bytes) will be fetched so it is | |||

impossible to guarantee a big enough buffer. One infamous | |||

‘hack’ in the past involved an exploitation of the Unix | |||

<strong>finger daemon</strong> which used this library call. | |||

<strong>Never use <code>gets()</code>.</strong> | |||

The problem is addressed by <code>fgets()</code> which has a similar function | |||

but includes an argument to limit the size. Thus | but includes an argument to limit the size. Thus | ||

<code>fgets(p_buffer, buffer_size, stdin);</code> provides a safe alternative. | <code>fgets(p_buffer, buffer_size, stdin);</code> provides a safe alternative. | ||

</blockquote> | </blockquote> | ||

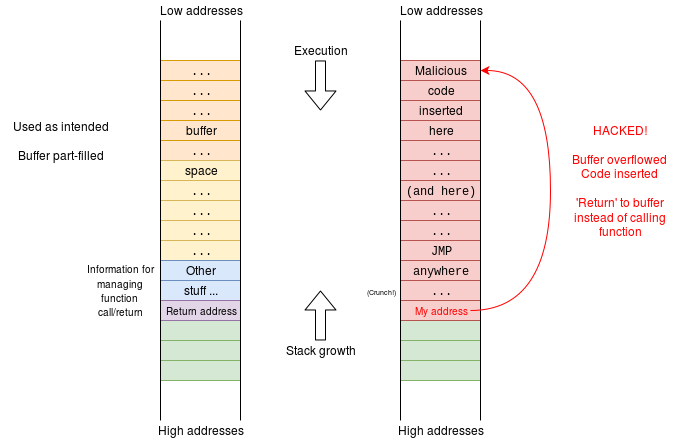

The figure below shows a possible attack. The attacker knows the size | The figure below shows a possible attack. The attacker knows the size of the buffer and something about the calling convention of the compiler used. By carefully overfilling the buffer the function return address can be overwritten with something else, causing the current function to ‘return’ to the ‘wrong’ place when it has completed. If the address of the stack is also known, this ‘wrong’ place could be within the buffer itself – which the attacker has loaded with new <strong>code</strong>. The attacker now | ||

of the buffer and something about the calling convention of the | |||

compiler used. By carefully overfilling the buffer the function | |||

return address can be overwritten with something else, causing the | |||

current function to ‘return’ to the ‘wrong’ | |||

place when it has completed. If the address of the stack is also | |||

known, this ‘wrong’ place could be within the buffer itself | |||

– which the attacker has loaded with new <strong>code</strong>. The attacker now | |||

has control of this process. | has control of this process. | ||

If this vulnerability is within a [[System_Calls | system call]] the | If this vulnerability is within a [[System_Calls | system call]] the attacker will have full O.S. [[Processor_Privilege | privilege]]. This is a Bad Thing. | ||

attacker will have full O.S. [[Processor_Privilege | privilege]]. This | |||

is a Bad Thing. | |||

[[Image:buffer_overflow.png|link=|alt=Buffer Overflow]] | [[Image:buffer_overflow.png|link=|alt=Buffer Overflow]] | ||

| Line 56: | Line 29: | ||

*Make the address to jump to (“My address”) impossible to predict. See ASLR: notice that “My address” was a specific value supplied by the attacker. | *Make the address to jump to (“My address”) impossible to predict. See ASLR: notice that “My address” was a specific value supplied by the attacker. | ||

An overflow attack may not even need to run code. If the “Other | An overflow attack may not even need to run code. If the “Other stuff…” in the figure above contains variables (it almost | ||

stuff…” in the figure above contains variables (it almost | certainly does!) then it may be possible to change something there which will (for example) change the outcome of: | ||

certainly does!) then it may be possible to change something there | |||

which will (for example) change the outcome of: | |||

<syntaxhighlight lang="C"> | <syntaxhighlight lang="C"> | ||

if (operation == allowed) ... | if (operation == allowed) ... | ||

| Line 65: | Line 36: | ||

from <code>false</code> to <code>true</code>. | from <code>false</code> to <code>true</code>. | ||

---- | ---- | ||

Given that buffer overflows have been understood for decades, one might expect them not to occur any more. However, that is not the case. In fact, buffer overflows are still outright common - and one day, even you, the reader, might write code that's vulnerable to it at work. | |||

expect them not to occur any more. | |||

Here’s an example from | Here’s an example from [https://www.bbc.co.uk/news/technology-48262681 WhatsApp] from 2019 … | ||

[https://www.bbc.co.uk/news/technology-48262681 WhatsApp] from 2019 … | Or for the gamers among us, the [http://misc.ktemkin.com/fusee_gelee_nvidia.pdf Fusée Gelée] exploit for the Nintendo Switch (and other Tegra X1 devices) also makes use of buffer overflow. | ||

---- | ---- | ||

| Line 125: | Line 95: | ||

privileges it can then make other ‘holes’ in the defences. | privileges it can then make other ‘holes’ in the defences. | ||

---- | ---- | ||

{{BookChapter|16.2.2|628-631}} | |||

{{PageGraph}} | {{PageGraph}} | ||

{{Category|Security}} | {{Category|Security}} | ||

{{Category|User}} | {{Category|User}} | ||

Latest revision as of 10:02, 5 August 2019

| Depends on | Security • Memory |
|---|---|

The buffer overflow vulnerability is a security issue worthy of particular mention because it is both a ‘classic’ attack and an illustration of several general principles and some possible defences.

The boundary of a software structure, such as an array, will not be checked in hardware. Providing hardware checks on a word-by-word basis is too expensive, so hardware usually relies on page-level checks. Testing array limits in software also costs execution time. It may be done as part of the software environment (e.g. Java) or left to the programmer (e.g. C). Programmers *sometimes* (make that “usually”) omit the checks because they ‘know’ the index is within the limits.

A classic security flaw arises when this assumption is false and an attacker can input more bytes than an allocated space will hold. The space is typically an input **buffer**, and the input can **overflow** the area, unchecked.
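Here is a minimal sketch of the software check described above. The helper `store_checked` is hypothetical and does not come from any library. It compares the index against the array length before writing. That costs one comparison per access, which is exactly the overhead programmers are tempted to skip when they ‘know’ the index is valid.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical helper: write value into arr[index] only if index is
   within the array's limits. Hardware will not make this check at
   word granularity, so software has to. */
bool store_checked(int *arr, size_t len, size_t index, int value)
{
    if (index >= len) {
        return false;          /* refuse rather than write past the end */
    }
    arr[index] = value;
    return true;
}
```

The index is an unsigned `size_t`, so the single `index >= len` test also rejects what would have been a negative index. A negative value converted to `size_t` wraps to a very large number.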

A ‘classic’ example is the C library function `gets()`, which fetches bytes from the `stdin` stream until the next ‘newline’ (or `EOF`). There is no way to tell in advance how many characters (bytes) will be fetched, so there is no way to guarantee a big enough buffer. One infamous ‘hack’ in the past, the 1988 Morris worm, exploited the Unix **finger daemon**, which used this library call. **Never use `gets()`.** (It was removed from the language altogether in C11.) The problem is addressed by `fgets()`, which has a similar function but includes an argument to limit the size. Thus `fgets(p_buffer, buffer_size, stdin);` provides a safe alternative.
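One practical difference is worth knowing: `fgets()` keeps the trailing newline, whereas `gets()` discarded it. The hypothetical wrapper `read_line` below is a sketch, not a standard function. It shows one way to use `fgets()` as a drop-in for line input while still stripping the newline.

```c
#include <limits.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical wrapper: read one line from `in` into `buf`, which holds
   `size` bytes. fgets() stores at most size - 1 characters plus '\0',
   so the buffer cannot overflow. Unlike gets(), fgets() keeps the
   newline; it is stripped here. Returns buf, or NULL on EOF or error. */
char *read_line(char *buf, size_t size, FILE *in)
{
    if (size == 0 || size > INT_MAX) {
        return NULL;                  /* fgets() takes an int size */
    }
    if (fgets(buf, (int) size, in) == NULL) {
        return NULL;
    }
    buf[strcspn(buf, "\n")] = '\0';   /* drop the newline, if present */
    return buf;
}
```

A typical call is `char name[32]; if (read_line(name, sizeof name, stdin) != NULL) { ... }`. A line longer than the buffer is truncated rather than overflowing, and the rest of it stays in the stream for the next call. A caller that must detect truncation can check whether a newline was present before stripping it.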

The figure below shows a possible attack. The attacker knows the size of the buffer and something about the calling convention of the compiler used. By carefully overfilling the buffer, the attacker can overwrite the function's return address with another value. The current function then ‘returns’ to the ‘wrong’ place when it completes. If the address of the stack is also known, this ‘wrong’ place could be within the buffer itself, which the attacker has loaded with new **code**. The attacker now has control of this process.

If this vulnerability is within a system call, the attacker will have full O.S. privilege. This is a Bad Thing.

![Buffer Overflow](image-1.png)
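The overwrite in the figure can be imitated without corrupting a real stack. The sketch below is a simulation built on stated assumptions:

- `frame_model` is a hypothetical plain byte array.
- Its first 16 bytes play the buffer, and the next 8 bytes play the saved return address.
- Real frame layouts depend on the compiler, ABI and architecture.

The copy trusts its input length, as `gets()` does. Input longer than the buffer therefore lands on the return-address slot.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BUF_SIZE 16   /* assumed buffer size */
#define RET_SIZE 8    /* assumed size of a saved return address */

/* Hypothetical model of part of a stack frame, held in one array so
   that the simulation itself never writes out of bounds. */
typedef struct {
    unsigned char bytes[BUF_SIZE + RET_SIZE];
} frame_model;

void set_return_address(frame_model *f, uint64_t addr)
{
    memcpy(f->bytes + BUF_SIZE, &addr, RET_SIZE);
}

uint64_t get_return_address(const frame_model *f)
{
    uint64_t addr;
    memcpy(&addr, f->bytes + BUF_SIZE, RET_SIZE);
    return addr;
}

/* Copies n input bytes into the buffer, assuming n <= BUF_SIZE without
   checking it. The clamp only stops the model overrunning its own array. */
void unchecked_copy(frame_model *f, const unsigned char *in, size_t n)
{
    if (n > sizeof f->bytes) {
        n = sizeof f->bytes;
    }
    memcpy(f->bytes, in, n);
}

/* Attacker's input: filler up to the end of the buffer, then the chosen
   "My address". Returns the payload length. */
size_t build_payload(unsigned char *out, uint64_t my_address)
{
    memset(out, 'A', BUF_SIZE);
    memcpy(out + BUF_SIZE, &my_address, RET_SIZE);
    return BUF_SIZE + RET_SIZE;
}
```

A short input leaves the saved address alone. A 24-byte payload from `build_payload` replaces it with the attacker's value, which is the ‘return to the wrong place’ step in the figure.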

There are defences:

- Don’t write code assuming that inputs will always be legal.

- Use a “canary”. This is an extra, random value placed amongst the “Other stuff…” by the compiler. Checking this value before the function returns means the intrusion will probably be detected before the malicious code can be executed.

- Make the target stack space non-executable. Modern memory protection can allow the MMU to distinguish between a data read and an instruction fetch; forbidding the latter on the stack segment (which must have data read and write access) would cause a segmentation fault at “My address” in the above example.

- Make the address to jump to (“My address”) impossible to predict. See ASLR: notice that “My address” was a specific value supplied by the attacker.
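The canary defence can be simulated in the same style. This is a sketch under assumptions: the hypothetical `guarded_frame` places an 8-byte canary between a 16-byte buffer and an 8-byte return address. A contiguous overflow cannot reach the return address without first overwriting the canary, so checking the canary before ‘returning’ exposes the attack. The test uses a fixed canary for reproducibility. A real one is random for each run, so the attacker cannot simply write the correct value back. In practice, GCC and Clang insert such checks with `-fstack-protector` (and its variants) and abort the program when a check fails.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BUF_SIZE    16  /* assumed buffer size */
#define CANARY_SIZE  8  /* assumed canary size */
#define RET_SIZE     8  /* assumed size of a saved return address */

/* Hypothetical frame model: buffer, then canary, then return address. */
typedef struct {
    unsigned char bytes[BUF_SIZE + CANARY_SIZE + RET_SIZE];
} guarded_frame;

/* "Prologue": place the canary between the buffer and the return address. */
void frame_enter(guarded_frame *f, uint64_t canary, uint64_t ret)
{
    memset(f->bytes, 0, BUF_SIZE);
    memcpy(f->bytes + BUF_SIZE, &canary, CANARY_SIZE);
    memcpy(f->bytes + BUF_SIZE + CANARY_SIZE, &ret, RET_SIZE);
}

/* Unchecked copy into the buffer; the clamp only keeps the model in bounds. */
void frame_copy(guarded_frame *f, const unsigned char *in, size_t n)
{
    if (n > sizeof f->bytes) {
        n = sizeof f->bytes;
    }
    memcpy(f->bytes, in, n);
}

/* "Epilogue": before trusting the return address, confirm the canary
   still holds the value stored on entry. */
bool frame_canary_intact(const guarded_frame *f, uint64_t canary)
{
    uint64_t seen;
    memcpy(&seen, f->bytes + BUF_SIZE, CANARY_SIZE);
    return seen == canary;
}
```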

An overflow attack may not even need to run code. If the “Other stuff…” in the figure above contains variables (it almost certainly does!) then it may be possible to change something there which will (for example) change the outcome of:

```c
if (operation == allowed) ...
```

from `false` to `true`.
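A data-only attack can be sketched in a hypothetical `request_frame` model. It is an 8-byte name buffer followed directly by a one-byte permission flag, standing in for a variable among the “Other stuff…”. The layout is an assumption for illustration; a real compiler may order or pad locals differently. No code is injected. Nine bytes of input are enough to turn ‘not allowed’ into ‘allowed’. The checked variant shows the fix.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define NAME_SIZE 8            /* assumed buffer size */
#define FLAG_OFFSET NAME_SIZE  /* flag sits directly after the buffer */

/* Hypothetical frame model: an 8-byte name buffer followed by a
   one-byte permission flag. */
typedef struct {
    unsigned char bytes[NAME_SIZE + 1];
} request_frame;

void request_init(request_frame *r)
{
    memset(r->bytes, 0, sizeof r->bytes);   /* flag starts as "not allowed" */
}

/* Unchecked: trusts that n <= NAME_SIZE (clamped only to the model). */
void request_set_name(request_frame *r, const char *name, size_t n)
{
    if (n > sizeof r->bytes) {
        n = sizeof r->bytes;
    }
    memcpy(r->bytes, name, n);
}

/* Checked: refuses any name that would not fit in the buffer. */
bool request_set_name_checked(request_frame *r, const char *name, size_t n)
{
    if (n > NAME_SIZE) {
        return false;
    }
    memcpy(r->bytes, name, n);
    return true;
}

bool request_allowed(const request_frame *r)
{
    return r->bytes[FLAG_OFFSET] != 0;
}
```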

Given that buffer overflows have been understood for decades, one might expect them no longer to occur. That is not the case: buffer overflows are still common, and one day even you, the reader, might write vulnerable code at work.

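Here’s an example from [WhatsApp](https://www.bbc.co.uk/news/technology-48262681) from 2019 … Or for the gamers among us, the [Fusée Gelée](http://misc.ktemkin.com/fusee_gelee_nvidia.pdf) exploit for the Nintendo Switch (and other Tegra X1 devices) also makes use of a buffer overflow.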

Address Space Layout Randomisation (ASLR)

Rather than loading code and data at fixed (and therefore predictable) addresses, some systems place them at different, hard-to-predict locations for each execution. This makes it considerably harder for an attacker (which may be another program) to locate a critical target.

ASLR is supported by many modern operating systems. It can make attacks considerably more difficult by making targets harder to locate, but it does not prevent them.

Does your computer do this? Try the following simple variant on an early exercise:

```c
// Do I use ASLR?
#include <stdlib.h>   // Contains: exit()
#include <stdio.h>    // Contains: printf()

int global;                           // Globally scoped variable

int main(int argc, char *argv[])      // The 'root' program; execution start
{
  int local;                          // Variable local to 'main'

  // %p is the portable conversion for addresses. Casting a function
  // pointer to void * is not guaranteed by ISO C but works on POSIX systems.
  printf("           Code at %p\n", (void *) &main);
  printf("Global variable at %p\n", (void *) &global);
  printf(" Local variable at %p\n", (void *) &local);
  exit(0);
}
```

Running that will show the (virtual) addresses of the code, static data (global variables) and stack (local variables) of the process. Running it again may reveal that some of these change from run to run. If any change, the most likely is the last, because the stack typically holds a mixture of data and code pointers (mostly return addresses). If an attack can change a code pointer, it may be able to divert execution to its own code. To do this successfully, it also needs to know where that code is. If this is always the same on some set of similarly configured computers, an attacker can determine the target statically, in advance. If the target is selected dynamically (at run time), it is harder to hit!

Notice that the vulnerability derives from an error in the original application. A mistake in an application, such as not checking array bounds, might lead to a takeover of that process. That process could then compromise anything its legitimate owner could access. This is not the fault of the operating system design, although the O.S. may act to make a vulnerability harder to exploit.

Of course, if such code is running with ‘root’ privileges, it can then make other ‘holes’ in the defences.

| Also refer to: | Operating System Concepts, 10th Edition: Chapter 16.2.2, pages 628-631 |
|---|---|