Threads

pc>Yuron No edit summary |


Latest revision as of 10:03, 5 August 2019


| Depends on | Processes • Multi Threading |
|---|---|

A thread of execution is a single, sequential series of machine instructions (or lines of code if you prefer to think at a more abstract level).

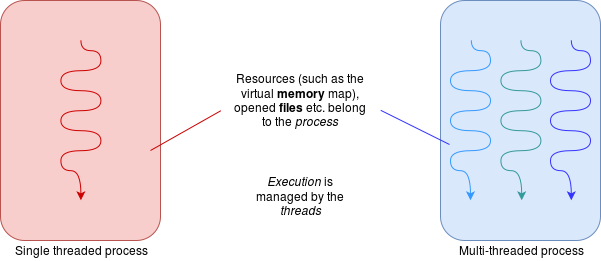

Threads are broadly similar to processes (and are sometimes called “lightweight processes”). Threads belong within processes. The simple model is to have one thread in each process (in which case the term “thread” becomes redundant), but any process could have multiple threads. Threads in the same process share most of their context, although each has some private state, such as its processor register values and its own stack.

Differences between processes and threads:

- threads within the same process share access to resources such as peripheral devices and files.

- threads within the same process share a virtual memory map.

- switching threads within the same process is considerably cheaper (faster) than switching processes because there is (much) less context to switch.
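The shared/private split can be made concrete with a minimal Java sketch. The class and method names are illustrative and do not come from any library. All the worker threads write into one array object, which lives in the process's shared memory. By contrast, each thread's variable `local` lives on that thread's own stack and is invisible to the others.

```java
import java.util.Arrays;

// Illustrative sketch (hypothetical class): threads in one process share heap
// objects (same virtual memory map), but each thread's local variables live on
// its own private stack.
class SharedMemoryDemo {

    static int[] run(int numThreads) {
        int[] shared = new int[numThreads];          // one object, visible to every thread
        Thread[] workers = new Thread[numThreads];
        for (int i = 0; i < numThreads; i++) {
            final int id = i;                        // copy captured by the lambda
            workers[i] = new Thread(() -> {
                int local = 0;                       // private: on this thread's own stack
                for (int k = 1; k <= 10; k++) {
                    local += id;
                }
                shared[id] = local;                  // published through shared memory
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();                            // join() also makes the worker's writes visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while joining", e);
            }
        }
        return shared;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(run(4))); // [0, 10, 20, 30]
    }
}
```

Each slot of the result was written by a different thread. The main thread can still read all of them once it has joined the workers.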

Perhaps unsurprisingly, it is also faster to create or destroy a thread than to create or destroy a process.

Thread management

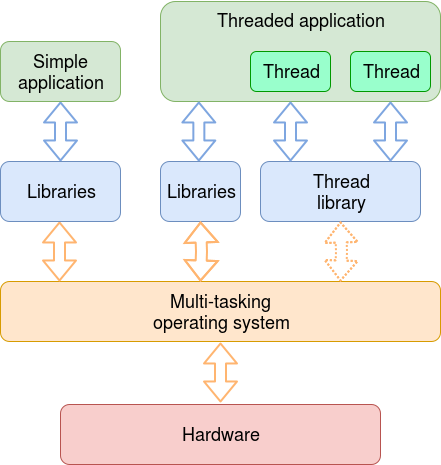

Threads may be managed by the operating system scheduler or, at user level, by a separate scheduler in the application (or both!). In Java (and possibly elsewhere), threads scheduled within the application (here, the JVM) are referred to as “green threads”; those scheduled by the operating system (when available) are “native threads”.

Just like processes, each thread has its own state: {running, ready or blocked}.
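Java exposes a thread's state through `Thread.getState()`. Its `Thread.State` values are finer-grained than {running, ready, blocked}:

- `RUNNABLE` covers both running and ready.
- `BLOCKED`, `WAITING` and `TIMED_WAITING` are different kinds of blocked.

The sketch below forces a thread into `BLOCKED` by holding a lock that the thread needs. The class name is hypothetical, and the sketch assumes Java 9 or later for `Thread.onSpinWait()`.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch (hypothetical class): watching one thread move through
// NEW -> BLOCKED -> TERMINATED.
class ThreadStateDemo {

    static List<Thread.State> observe() {
        Object lock = new Object();
        List<Thread.State> seen = new ArrayList<>();
        Thread worker = new Thread(() -> {
            synchronized (lock) {        // blocks while main holds the lock
                // empty critical section
            }
        });
        seen.add(worker.getState());     // NEW: created but not yet started
        synchronized (lock) {
            worker.start();
            Thread.State s;
            // Spin until the worker is actually blocked on the lock we hold.
            while ((s = worker.getState()) != Thread.State.BLOCKED) {
                Thread.onSpinWait();
            }
            seen.add(s);                 // BLOCKED
        }                                // releasing the lock lets the worker run
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        seen.add(worker.getState());     // TERMINATED
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(observe());   // [NEW, BLOCKED, TERMINATED]
    }
}
```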

User-level thread management typically allows faster thread switching, because it avoids (slow) system calls. The scheduling can also be tailored to the application, giving more predictable behaviour.

On the other hand, user-level threads probably need to use cooperative scheduling, which relies on all the threads being ‘well behaved’. For example, if one thread makes a system call which blocks, all the threads in that process will be blocked, even if others could in principle continue. This may cause an unnecessary (and expensive) process context switch.

A potential disadvantage of managing threads at user level is that it requires its own management software for creating, tracking, switching, synchronising and destroying threads. This may be in the form of an imported library.
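The sketch below shows the kind of job such user-level management software does: a minimal cooperative, round-robin scheduler in Java. The `Task` interface and `CooperativeScheduler` class are hypothetical, invented for this illustration. A real user-level thread library must also save and restore registers and stacks. This sketch sidesteps that by having each task keep its state in an ordinary object and give control back by returning from `step()`.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch of a user-level, cooperative round-robin scheduler.
class CooperativeScheduler {

    // A "thread" here does one small step of work, then voluntarily returns
    // control (yields). It returns true once it has finished.
    interface Task {
        boolean step(List<String> log);
    }

    // Example task: counts to n, yielding after each count.
    static Task counter(String name, int n) {
        int[] count = {0};              // the task's private state, kept between steps
        return log -> {
            count[0]++;
            log.add(name + count[0]);
            return count[0] >= n;       // true = finished
        };
    }

    // Run one step of the task at the head of the ready queue, then move it to the
    // back unless it has finished. No system calls and no OS involvement.
    static List<String> runAll(List<Task> tasks) {
        Deque<Task> ready = new ArrayDeque<>(tasks);
        List<String> log = new ArrayList<>();
        while (!ready.isEmpty()) {
            Task t = ready.pollFirst();
            boolean finished = t.step(log);   // "switch" to this task
            if (!finished) {
                ready.addLast(t);             // back of the ready queue
            }
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(runAll(List.of(counter("A", 3), counter("B", 2))));
        // [A1, B1, A2, B2, A3]
    }
}
```

A switch here costs only a method call. However, the scheme depends entirely on each `step()` returning promptly. If one task looped forever or made a blocking call inside `step()`, the scheduler would never regain control, and every other task would stall.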

Thread management by the operating system allows threads to be scheduled in a similar fashion to any process. Context switching between threads of the same process can be cheaper than process switching, but it is still likely to be more expensive than switching at user level within the application.
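By contrast, ordinary Java threads on a modern JVM are normally mapped to OS threads and scheduled preemptively. A thread therefore does not have to be ‘well behaved’ for others to run. In this sketch (class name hypothetical), a spinner thread busy-waits without ever yielding or blocking, yet the main thread still completes its own work. On a multi-core machine the two threads may simply run in parallel. On a single core, it is the OS's preemptive scheduler that lets the main thread run.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Illustrative sketch (hypothetical class): an OS-scheduled thread that never
// yields does not stop the other threads of the same process from progressing.
class PreemptionDemo {

    static long run() {
        AtomicBoolean stop = new AtomicBoolean(false);
        Thread spinner = new Thread(() -> {
            while (!stop.get()) {
                // busy-wait: never sleeps, blocks or yields voluntarily
            }
        });
        spinner.start();

        long sum = 0;                   // meanwhile, main does its own work
        for (int i = 1; i <= 1_000_000; i++) {
            sum += i;
        }

        stop.set(true);                 // the spinner sees this and exits its loop
        try {
            spinner.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(run());      // 500000500000
    }
}
```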

If you want to look at some pros and cons of the different strategies, have a look at the literature such as this or this.

Exercise

There is an exercise to gain some familiarity with Java threads.

Examples

- Java supports threading as part of the language. Indeed, behind the scenes, the Java “system” itself probably relies on threads. In a simple system these may be scheduled by the Java Virtual Machine (JVM) software; more contemporary systems probably get help from the OS too. Here’s (yet) another article which may help explain this.

- Pthreads (POSIX threads) provide a standardised, language-independent thread model for Unix(-like) systems.
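Java's language-level support includes the `synchronized` keyword. It makes a method (or block) a critical section guarded by the object's lock. Below is a minimal sketch, using a hypothetical `SynchronizedCounter` class, in which several threads update one shared counter.

```java
// Illustrative sketch (hypothetical class): the language-level `synchronized`
// keyword stops concurrent increments from being lost.
class SynchronizedCounter {

    private long count = 0;

    synchronized void increment() {     // one thread at a time per counter object
        count++;
    }

    synchronized long get() {
        return count;
    }

    static long runWorkers(int threads, int incrementsEach) {
        SynchronizedCounter counter = new SynchronizedCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int k = 0; k < incrementsEach; k++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runWorkers(4, 100_000)); // 400000
    }
}
```

With the lock, four threads doing 100,000 increments each always give exactly 400,000. Without `synchronized`, `count++` is an unprotected read-modify-write, so updates from different threads could be lost.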

High-performance hardware may support threads directly.

Partly this is a pragmatic attempt to extract more useful work from the processor. One approach is to make thread switching very fast, so that switching is worthwhile whenever one thread is stalled for even a short time (such as on a cache miss). This requires extra registers to hold each thread’s context. Another approach is to have hardware which runs multiple threads genuinely concurrently (“Simultaneous Multithreading” (SMT)). “Hyper-Threading”, which you may have heard mentioned, is Intel’s term for one version of this.

In such cases the operating system will need to become involved in the thread scheduling.
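One visible effect of this OS involvement is that the operating system typically presents each hardware thread to software as a separate (logical) processor. The small Java check below (class name hypothetical) asks the JVM how many processors it may use. On an SMT machine this is typically the number of hardware threads rather than physical cores. Container or CPU-affinity limits can reduce it.

```java
// Illustrative sketch (hypothetical class): how many processors the OS lets the
// JVM use. On SMT hardware this usually counts hardware threads, not cores.
class HardwareThreads {

    static int logicalProcessors() {
        return Runtime.getRuntime().availableProcessors();
    }

    public static void main(String[] args) {
        System.out.println("Processors available to the JVM: " + logicalProcessors());
    }
}
```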

| Also refer to: | Operating System Concepts, 10th Edition: Chapter 4, pages 160-197 |
|---|---|