Direct Memory Access (DMA)

Depends on: Using Peripherals • Memory

Some ‘computing’ processes are simple and tediously repetitive, especially those concerned with input and output. An example would be loading blocks of data into memory from a magnetic disk.

A ‘general purpose’ CPU can do this but:

- it may spend many, perhaps quite short, idle intervals waiting for ‘the next byte’, which is inefficient.

- it is not optimised for this type of operation; its energy efficiency is fairly poor.

- it could be ‘better’ employed doing more complex ‘computing’.
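
For comparison, the busy-waiting described above (usually called programmed I/O) can be sketched in C. This is a minimal simulation, not real driver code: `struct sim_device`, `dev_ready()` and `dev_read()` are hypothetical stand-ins for a device's status and data registers, and `latency` is an assumed number of polls before each byte becomes ready.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical simulated device: each byte becomes ready only after
   `latency` status polls. */
struct sim_device {
    const uint8_t *data;   /* bytes the device will deliver */
    size_t size;           /* how many bytes it has */
    size_t next;           /* index of the next byte */
    unsigned latency;      /* polls needed per byte */
    unsigned countdown;    /* polls left before the current byte is ready */
};

/* Status register: nonzero when a byte is available. */
static int dev_ready(struct sim_device *d)
{
    if (d->countdown > 0) {
        d->countdown--;
        return 0;
    }
    return 1;
}

/* Data register: hand over the byte and restart the countdown. */
static uint8_t dev_read(struct sim_device *d)
{
    assert(d->next < d->size);
    d->countdown = d->latency;
    return d->data[d->next++];
}

/* Programmed I/O: the CPU moves every byte itself and busy-waits in between.
   Returns how many polls found the device not ready (wasted CPU work). */
static unsigned long pio_read(struct sim_device *d, uint8_t *dst, size_t len)
{
    unsigned long wasted = 0;
    for (size_t i = 0; i < len; i++) {
        while (!dev_ready(d))
            wasted++;
        dst[i] = dev_read(d);
    }
    return wasted;
}
```

With `latency` and the initial `countdown` both set to 3, reading 4 bytes wastes 12 polls; every one of those is CPU time that a DMA controller could free up.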

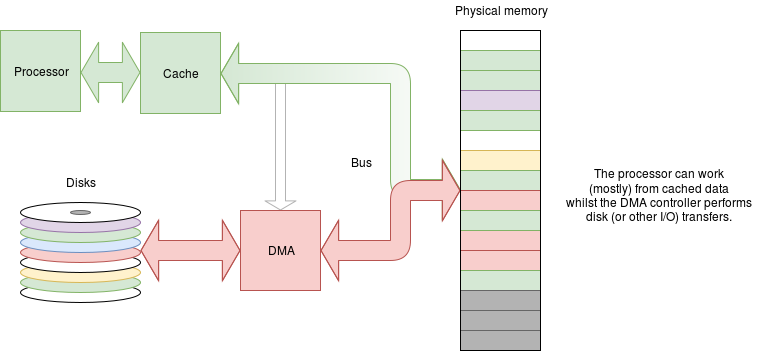

A common way to support such operations is to include a hardware DMA controller in the system. This is a simple ‘processor’ typically capable of moving data from place to place and counting how much it has done … and that’s about it.

A DMA transfer is set up by the CPU, which may say something like:

- Source: disk input device data
- Destination: memory from address 0x0012_3400
- Length: 4 KiB

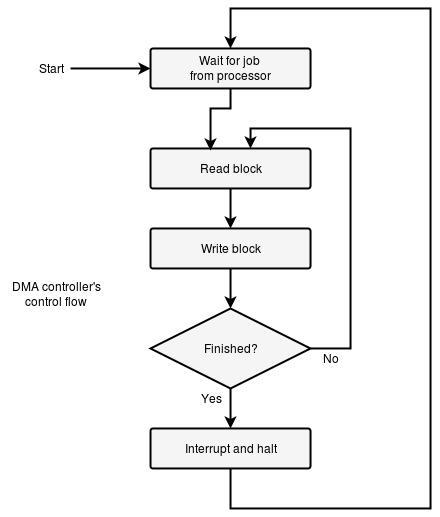

and the DMA controller will then liaise with the input device, performing something like:

    for (length)
        wait for data
        load data from I/O
        store data in memory
        increment memory pointer
    interrupt
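
The controller's loop can be modelled in C as a rough sketch. It assumes a hypothetical simulated physical memory (`ram`, 2 MiB so that the example address 0x0012_3400 fits), a hypothetical always-ready input device, and a counter `irq_count` standing in for the completion interrupt; none of these names come from a real controller.

```c
#include <assert.h>
#include <stdint.h>

#define RAM_SIZE 0x200000u          /* hypothetical 2 MiB of simulated physical memory */
static uint8_t ram[RAM_SIZE];
static unsigned irq_count;          /* stands in for the completion interrupt */

/* Hypothetical input device that is always ready and yields 0, 1, 2, ... */
struct sim_input { uint8_t next_value; };

static uint8_t io_load(struct sim_input *dev) { return dev->next_value++; }

static void raise_irq(void) { irq_count++; }

/* What the CPU writes into the controller before starting it. */
struct dma_descriptor {
    struct sim_input *source;  /* Source: input device */
    uint32_t dest;             /* Destination: physical address */
    uint32_t length;           /* Length: in bytes */
};

/* The controller's loop, following the pseudocode step by step. */
static void dma_run(const struct dma_descriptor *desc)
{
    assert(desc->dest <= RAM_SIZE && desc->length <= RAM_SIZE - desc->dest);
    uint32_t addr = desc->dest;
    for (uint32_t n = desc->length; n > 0; n--) {
        /* wait for data: nothing to do, this simulated device is always ready */
        uint8_t byte = io_load(desc->source);   /* load data from I/O */
        ram[addr] = byte;                       /* store data in memory */
        addr++;                                 /* increment memory pointer */
    }
    raise_irq();                                /* interrupt */
}
```

In real hardware the ‘wait for data’ step is typically a request/acknowledge handshake with the device rather than a no-op, and the whole loop runs without the CPU's involvement.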

The interrupt may be used to set up another data transfer.
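
One way a driver might use the completion interrupt to start the next transfer is sketched below. The request queue, `dma_submit()` and `dma_complete_isr()` are hypothetical. A real driver would also need to stop the interrupt handler and `dma_submit()` from racing (for example by masking interrupts around the queue update), which this single-threaded sketch ignores.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical request: move `length` bytes to physical address `dest`. */
struct dma_request { uint32_t dest; uint32_t length; };

#define QUEUE_MAX 8
static struct dma_request queue[QUEUE_MAX];
static size_t q_head, q_tail;          /* simple FIFO without wrap-around */
static int dma_busy;
static struct dma_request current;     /* the transfer the controller is doing */
static unsigned transfers_started;

/* Program the controller's registers and set it going. */
static void dma_start(struct dma_request r)
{
    current = r;
    dma_busy = 1;
    transfers_started++;
}

/* Driver entry point: start at once if idle, otherwise queue the request. */
static void dma_submit(struct dma_request r)
{
    if (!dma_busy) {
        dma_start(r);
    } else {
        assert(q_tail < QUEUE_MAX);
        queue[q_tail++] = r;
    }
}

/* Completion interrupt handler: set up the next queued transfer, if any. */
static void dma_complete_isr(void)
{
    dma_busy = 0;
    if (q_head < q_tail)
        dma_start(queue[q_head++]);
}
```

Submitting three requests starts the first immediately; each following interrupt launches the next, so the controller is kept busy without the CPU polling it.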

Each transfer may take a considerable time, during which the processor can do something else.

Example: during paging it would be normal for the page manager (invoked by a memory fault in one process) to set up a DMA transfer and then wait for the corresponding interrupt; this wait causes a context switch. The paging process remains blocked until the DMA controller signals completion (one or more transfers may be needed). The DMA controller is effectively a concurrent process, although it is not software running on a CPU core.
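
The paging example reduces to a state change on the faulting process. In this sketch `struct process`, `page_fault()` and `dma_irq()` are hypothetical simplifications: the disk request and the scheduler are left out, and `dma_pending` counts how many DMA transfers must complete before the page is usable.

```c
#include <assert.h>

enum proc_state { RUNNING, READY, BLOCKED };

/* Hypothetical, heavily reduced process control block. */
struct process {
    enum proc_state state;
    int awaiting_page;      /* page being fetched, or -1 if none */
    unsigned dma_pending;   /* DMA transfers still outstanding */
};

/* Page-fault path: having set up the DMA transfer(s), the page manager
   blocks the faulting process; the scheduler then switches to another one. */
static void page_fault(struct process *p, int page, unsigned transfers_needed)
{
    assert(transfers_needed > 0);
    p->awaiting_page = page;
    p->dma_pending = transfers_needed;
    p->state = BLOCKED;
}

/* DMA completion interrupt: the process becomes runnable again only
   when the last transfer for its page has finished. */
static void dma_irq(struct process *p)
{
    assert(p->state == BLOCKED && p->dma_pending > 0);
    if (--p->dma_pending == 0) {
        p->awaiting_page = -1;
        p->state = READY;
    }
}
```

The process moves to READY rather than RUNNING: completing the DMA only makes it eligible to run, and the scheduler decides when it actually does.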

The same mechanism would be used to support data transfers between files and their buffers.

It is important to note that:

- the DMA controller is outside any MMU and so works on physical addresses (unless the system provides a separate IOMMU for devices)

- any physical pages scheduled for DMA transfers must be pinned so that they are not re-used until the transfer is complete (and then for the purpose intended!)
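
A minimal sketch of pinning, assuming a hypothetical frame table with a per-frame `pin_count` and a simple round-robin victim search; the point is only that page replacement must skip any frame that a DMA transfer still depends on.

```c
#include <assert.h>
#include <stddef.h>

#define NFRAMES 4

/* Hypothetical frame-table entry: only the pin count matters here. */
struct frame {
    unsigned pin_count;   /* > 0 while some DMA transfer still uses the frame */
};

static struct frame frames[NFRAMES];
static size_t next_victim;   /* round-robin starting point */

static void pin_frame(size_t f)
{
    assert(f < NFRAMES);
    frames[f].pin_count++;
}

static void unpin_frame(size_t f)
{
    assert(f < NFRAMES && frames[f].pin_count > 0);
    frames[f].pin_count--;
}

/* Pick a frame to re-use, never choosing a pinned one.
   Returns NFRAMES if every frame is currently pinned. */
static size_t choose_victim(void)
{
    for (size_t tries = 0; tries < NFRAMES; tries++) {
        size_t f = next_victim;
        next_victim = (next_victim + 1) % NFRAMES;
        if (frames[f].pin_count == 0)
            return f;
    }
    return NFRAMES;
}
```

A count rather than a flag lets several outstanding transfers pin the same frame independently; the frame becomes reusable only when the last of them unpins it.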

Real DMA controllers

A typical DMA controller can at least move data in either direction between memory and I/O devices. Several ‘channels’ may be provided, allowing multiple DMA transfers to run concurrently.
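
Multiple channels can be modelled as separate register sets sharing one bus. This sketch assumes a hypothetical four-channel controller that grants each bus cycle to the next active channel in round-robin order; real controllers may instead use fixed priorities or other arbitration schemes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum dma_dir { DEV_TO_MEM, MEM_TO_DEV };

/* Hypothetical per-channel register set. */
struct dma_channel {
    enum dma_dir dir;     /* which way this channel moves data */
    uint32_t remaining;   /* units still to move; 0 means idle */
    uint32_t moved;       /* units moved so far */
};

#define NCHANNELS 4
static struct dma_channel chan[NCHANNELS];

/* CPU side: program a channel, which must currently be idle. */
static void dma_program(size_t c, enum dma_dir dir, uint32_t count)
{
    assert(c < NCHANNELS && chan[c].remaining == 0);
    chan[c] = (struct dma_channel){ dir, count, 0 };
}

/* Controller side: grant one bus cycle to the next active channel in
   round-robin order. Returns that channel, or -1 if all are idle. */
static int dma_cycle(void)
{
    static size_t next;
    for (size_t i = 0; i < NCHANNELS; i++) {
        size_t c = (next + i) % NCHANNELS;
        if (chan[c].remaining > 0) {
            chan[c].remaining--;
            chan[c].moved++;
            next = (c + 1) % NCHANNELS;
            return (int)c;
        }
    }
    return -1;
}
```

With channel 0 reading from a device and channel 2 writing to one, their bus cycles interleave (0, 2, 0), so neither transfer has to wait for the other to finish.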

DMA operations may interfere slightly with the processor(s), as both compete for memory access. However, I/O DMA spends much of its time waiting for the device, so its demand on the memory bus may be modest; in addition, the processor(s) may have caches and so rarely need the memory bus anyway.
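
To put ‘may be modest’ into rough numbers, the share of bus cycles a DMA stream takes is approximately its data rate divided by the bus's peak rate. The figures used below (a 100 MB/s device, an 8-byte bus at 200 MHz) are purely hypothetical, and the formula ignores burst overheads and arbitration delays.

```c
#include <assert.h>

/* Approximate fraction of memory-bus cycles taken by one DMA stream.
   Assumes each bus cycle moves `bus_width_bytes` and the bus runs at
   `bus_hz` cycles per second; bursts and arbitration overhead are ignored. */
static double dma_bus_share(double device_bytes_per_s,
                            double bus_width_bytes, double bus_hz)
{
    assert(bus_width_bytes > 0.0 && bus_hz > 0.0);
    return device_bytes_per_s / (bus_width_bytes * bus_hz);
}
```

For example, `dma_bus_share(100e6, 8.0, 200e6)` gives 0.0625: the device occupies about 6% of bus cycles, leaving the rest for the processor(s).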

Common applications

- Large (block) I/O transfers

- High bandwidth I/O transfers

Memory-to-memory DMA

Some (slightly more sophisticated) DMA controllers will perform block copies too. Whilst generally useful, this is not as applicable to operating systems as the ability to move I/O data ‘in the background’.
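
A memory-to-memory transfer differs only in that both ends are memory addresses. The sketch below assumes a hypothetical descriptor (`struct m2m_desc`) and a controller that copies in fixed-size bursts and does not accept overlapping regions; both are illustrative assumptions, not properties of any particular device.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define RAM_SIZE 0x10000u
static uint8_t ram[RAM_SIZE];   /* hypothetical simulated physical memory */

/* Hypothetical memory-to-memory descriptor: both ends are physical addresses. */
struct m2m_desc { uint32_t src, dst, length; };

/* Copy in bursts of `burst` bytes; between bursts the bus is free for the CPU.
   Returns the number of bursts used. */
static unsigned m2m_copy(const struct m2m_desc *d, uint32_t burst)
{
    assert(burst > 0);
    assert(d->src <= RAM_SIZE && d->length <= RAM_SIZE - d->src);
    assert(d->dst <= RAM_SIZE && d->length <= RAM_SIZE - d->dst);
    /* assumed restriction: source and destination must not overlap */
    assert(d->src + d->length <= d->dst || d->dst + d->length <= d->src);
    unsigned bursts = 0;
    for (uint32_t done = 0; done < d->length; done += burst) {
        uint32_t n = d->length - done < burst ? d->length - done : burst;
        memcpy(&ram[d->dst + done], &ram[d->src + done], n);
        bursts++;
    }
    return bursts;
}
```

Copying 1000 bytes in 64-byte bursts takes 16 bursts, the last one partial.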

Common applications

- Large (block) memory copies

- Graphics (historically, e.g. blitters)

  - now largely superseded by more sophisticated GPUs, which have autonomous parallel access to the memory.

Also refer to: Operating System Concepts, 10th Edition, Chapter 12.2.4, pages 498-500