Memory Mapping Extra

| Depends on | Memory Mapping |

|---|

The basic principle of memory mapping should already have been explored before starting this article.

Two-level Page Tables

Imagine we choose 4 KiB as a reasonable page size: it is. This requires 12 bits as an offset, leaving 32 - 12 = 20 bits for the page address. This means that there are 220 pages which means ‘a lot’ of entries in the page table. Very few applications really need memory spread across all those pages and all those entries take up valuable space. So, often, the page tables are subdivided into a tree structure; only branches which are used are extended further.

With a fully expanded table this will take up (slightly) more space than a single-level table. However with a real application the savings are significant.

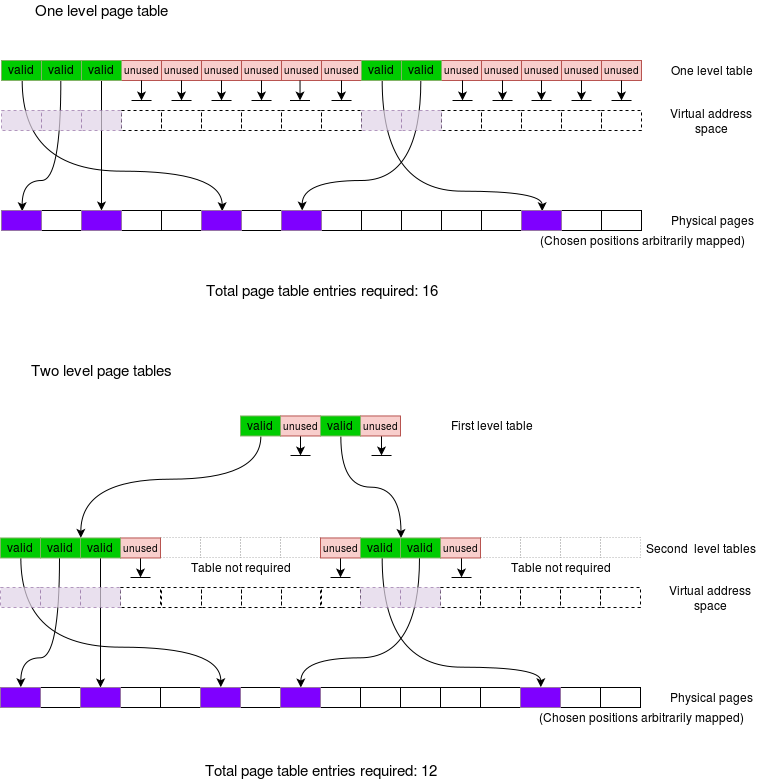

This figure shows the principle. Implicitly here there could be 64 pages. With a single level page look up this would always use 64 words. These tables use 32 words (8 in the first level, 3 x 8 = 24 in the second level) to map a ‘more realistic’ process.

This process has two logical segments, one with 10 pages and one with 5 pages. Simplistically, these could represent ‘code’ and ‘data’.

The application has them distributed so they don’t pack ‘neatly’ together, in this case. Even so most of the potential second level tables are not needed and the space is saved.

Note that each potential table is either present (in full) or not needed.

A fully expanded two-level scheme would be more expensive than a single level scheme. However it would be unusual to want this.

Here’s another picture to show how savings are made. This model only has 16 possible pages, of which 5 are used; a real map may have millions of possible pages on a 32-bit system (and, literally quadrillions in a 64-bit machine) of which tens or hundreds will typically be used. The savings are thus the only way to be practical.

An important principle is that, if nothing is wanted in a branch of a tree then that branch need not be expanded further. The same principle applies, for example, in i-nodes in a Unix file system.

Interactive demonstration

Here is a model which illustrates the principle, in action. So that it fits in a practical view, this is another 8-bit model, with 2 bits used for both the first- and second-level offsets (so 16 (= 22+2) pages of 16 bytes each, this time.)

Virtual Address in binary (8 bits)

Virtual Address = L1 PageTable index (2 bits) + L2 PageTable index (2 bits) + Offset (4 bits)

Physical Address in binary (8 bits)

This mechanism – provided by the computer hardware – will allow any virtual page to be mapped onto any physical page. The entries in the translation table are set up by software – i.e. the operating system when it is present.

How it works

- The most significant (in this case) 2 bits index into the first level table.

- If there is a valid entry, the next most significant (in this case) 2 bits index into the indicated second level table.

- The second level table provides the page frame (in this case 4 bits) which is concatenated with the remaining bits of the virtual address.

The first-level page table is always needed, but any second-level tables which are not used do not need to be created (thus occupy no memory!). Practically, the savings are considerable.

Any ‘extra’ information (‘?’) is only required in the second-level tables, with one entry per page frame.

Here is a more practical example.

Three-level Page Tables … and beyond

With the advent of 64-bit address spaces the number of potential pages has increased enormously. Despite the best efforts of the software department a lot of this space is still not used! To map these pages economically the above concept can be extended beyond two levels of look up.

Four levels are used in the Intel x86-64 architecture. In some newer ones, even five levels.

You should be able to visualise this from the principles above, without ever more complex drawings here.

| Also refer to: | Operating System Concepts, 10th Edition: Chapter 9.4.1, pages 371-373 |

|---|