Synchronisation

Revision as of 12:46, 26 July 2019 by pc>Yuron


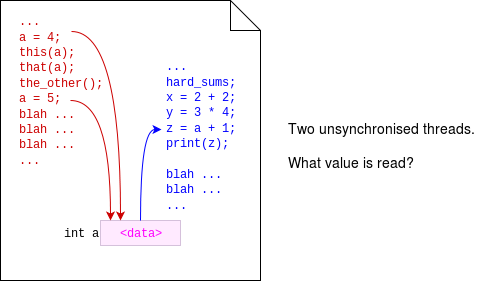

Once any set of threads or processes (the problem is similar at any level) is working on the same data, it typically becomes important to ensure some partial ordering of their execution. Even on a single processor, where only one thread executes at a time, the order in which the threads are scheduled is not usually predictable (i.e. it is “non-deterministic”). This is often referred to as “asynchronous” execution. (With more processors there can be genuinely concurrent execution; this makes no difference to the problem.)

Whilst threads/processes are doing independent things to their own data, this does not matter. When they interact, it can: if the result depends on the order in which their operations happen to be scheduled, there is a “race condition”.

Note that if code is non-deterministic it could produce different answers each time it is executed. This is not a Good Thing and, in many cases, it is a distinctly Bad Thing.
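To make the idea of a race concrete without relying on real scheduling, the Python sketch below simulates two threads that each execute `x = x + 1` on a shared variable. It assumes (as is typical) that the increment is performed as a separate read step and write step; `run_schedule` is a hypothetical helper, and the simulation enumerates every possible interleaving of those steps rather than running real threads.

```python
from itertools import permutations


def run_schedule(schedule):
    """Simulate two threads each executing 'x = x + 1' on a shared x.

    The increment is modelled as two separate steps: a read of the shared
    value into a private register, then a write of register + 1 back.
    `schedule` lists thread ids (0 or 1) in the order their steps run;
    each id appears twice (first occurrence = read, second = write).
    """
    shared = 0
    register = {}                         # each thread's private copy of what it read
    for tid in schedule:
        if tid not in register:
            register[tid] = shared        # step 1: read
        else:
            shared = register[tid] + 1    # step 2: write back
    return shared


# Every distinct ordering of the four steps (two per thread).
schedules = sorted(set(permutations([0, 0, 1, 1])))
results = {s: run_schedule(s) for s in schedules}
for s, final in results.items():
    print(s, "->", final)
```

Only the two schedules in which one thread finishes both of its steps before the other starts give the intended result of 2; in the other four, one update is lost and the result is 1.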

Thus there is a need for synchronisation of processes and threads. This can be done in a variety of ways, such as:

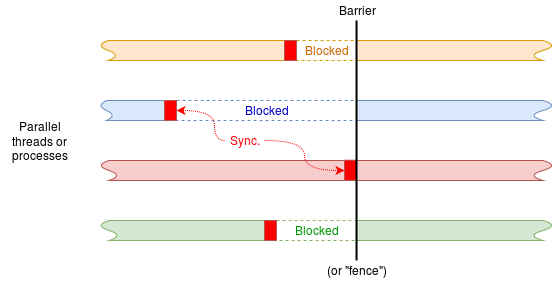

- A barrier: a predetermined number of threads become blocked until they are freed by the last one to arrive.

In principle this is simple to implement: each thread decrements a counter and blocks unless the count has reached zero. The thread whose decrement brings the count to zero instead signals all the other threads to unblock.

There is an animated demonstration of this.

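In practice it is a little more difficult, since each decrement operation must itself be atomic: if two threads read the counter at the same moment, one decrement could be lost and the count would never reach zero.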

- Thread join: two (or more) threads must ‘join’ before proceeding as a single thread (possibly forking again). This is a more expensive barrier.

- Mutual exclusion: can be used to provide atomicity for a section of code. Accesses to the protected structure(s) are synchronised, but the order in which threads gain access may still be non-deterministic.

- Message passing (asynchronous): bypass the problem by ‘sending’ the appropriate information as a snapshot before proceeding. Because the contents of a message (in one direction) are fixed at the time of transmission, the transmitter can be allowed to continue, perhaps reaching the point where it can send the next message too. A drawback is that a short/fast thread may try to send messages more frequently than its correspondent can read them; there is thus still some need for scheduling control.

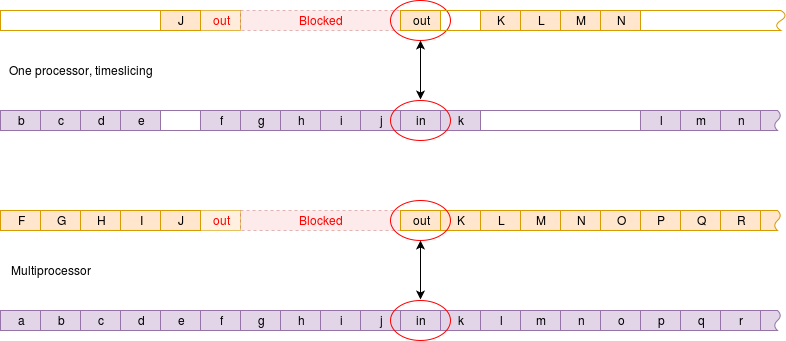

This probably doesn’t really ‘count’ as “synchronisation”...

- Message passing (synchronous): a message sender or message receiver, whichever reaches the relevant point first, blocks and waits for its counterpart to reach the corresponding step. At this point information is exchanged (this can be a two-way exchange if appropriate) and both threads are freed to continue. (If scheduling on a single processor, it is likely that the second thread to arrive will continue first, to avoid the overhead of a thread switch.)

A drawback of this strategy is the overhead (and possible inefficiency) resulting from the blocking and scheduling.

The synchronisation is most obvious in the multiprocessor view, where there is true parallelism.

Note that while operating systems typically need to employ (at least some of) these techniques for correct operation, they can also be needed in multi-threaded application code.
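The counter-based barrier from the list above can be sketched in Python as follows. This is a minimal one-shot version under stated assumptions, not a production implementation: `CountingBarrier` is a hypothetical name, and a `threading.Condition` supplies both the lock that makes each decrement atomic and the wait/notify mechanism used to block and release threads. (Python's standard library already provides a reusable `threading.Barrier`.)

```python
import threading


class CountingBarrier:
    """One-shot barrier: blocks callers until `count` threads have arrived.

    Each arriving thread decrements a counter; the thread that brings it
    to zero wakes all the others. Holding the lock makes each decrement atomic.
    """

    def __init__(self, count):
        self._remaining = count
        self._cond = threading.Condition()   # a lock plus a queue of waiting threads

    def wait(self):
        with self._cond:                     # decrement only while holding the lock
            self._remaining -= 1
            if self._remaining == 0:
                self._cond.notify_all()      # last arrival frees the rest
            else:
                while self._remaining > 0:   # re-check guards against spurious wake-ups
                    self._cond.wait()


N = 4
barrier = CountingBarrier(N)
log = []
log_lock = threading.Lock()


def worker(i):
    with log_lock:
        log.append(("before", i))
    barrier.wait()
    with log_lock:
        log.append(("after", i))


threads = [threading.Thread(target=worker, args=(i,)) for i in range(N)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# No thread logs "after" until every thread has logged "before".
print(log)
```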
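Thread join, as described in the list above, amounts to forking worker threads and then waiting for each of them to finish before continuing as a single thread. A minimal Python sketch, assuming the work splits into independent slices (the `sum_slice` helper and the four-way split are illustrative choices, not part of the original text):

```python
import threading

# Fork: start several threads, each filling in its own slot of `partial`.
data = list(range(1, 101))
partial = [0] * 4


def sum_slice(k):
    # Each thread writes only its own slot, so no locking is needed here.
    partial[k] = sum(data[k::4])


threads = [threading.Thread(target=sum_slice, args=(k,)) for k in range(4)]
for t in threads:
    t.start()

# Join: the main thread blocks until every worker has finished,
# so it is safe to read all of `partial` afterwards.
for t in threads:
    t.join()

total = sum(partial)
print(total)  # 5050
```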
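Mutual exclusion, from the list above, can be sketched with a `threading.Lock` guarding the read-modify-write of a shared counter. The lock guarantees that no increments are lost, but the order in which the threads acquire it is not specified. The thread and iteration counts here are arbitrary.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def add_many(n):
    global counter
    for _ in range(n):
        # Critical section: the read-modify-write of `counter` happens while
        # holding the lock, so no other thread can interleave with it.
        with counter_lock:
            counter += 1


threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000
```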
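Asynchronous message passing, from the list above, can be sketched with Python's thread-safe `queue.Queue`. Two assumptions of this sketch: the queue is bounded (`maxsize=2`), which supplies some of the scheduling control mentioned above by blocking a sender that gets too far ahead, and a `None` sentinel (the hypothetical `DONE`) marks the end of the stream. Each message is a copy, i.e. a snapshot, of the sender's state.

```python
import queue
import threading

# A bounded queue: put() blocks while it holds `maxsize` items, which stops a
# fast sender from running arbitrarily far ahead of a slow receiver.
mailbox = queue.Queue(maxsize=2)
received = []
DONE = None  # sentinel meaning "no more messages" (an assumption of this sketch)


def sender():
    state = {"step": 0}
    for step in range(5):
        state["step"] = step
        # Send a snapshot (a copy), so later changes to `state` by the sender
        # cannot affect what the receiver sees.
        mailbox.put(dict(state))
    mailbox.put(DONE)


def receiver():
    while True:
        msg = mailbox.get()
        if msg is DONE:
            break
        received.append(msg["step"])


threads = [threading.Thread(target=sender), threading.Thread(target=receiver)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(received)  # [0, 1, 2, 3, 4]
```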
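For synchronous message passing, from the list above, an unbuffered "rendezvous" channel can be built from a `threading.Condition`: `send()` does not return until a receiver has taken the value, and `receive()` blocks until a value is offered. The `Rendezvous` class is a hypothetical illustration; it is one-way only (the optional two-way exchange is not shown) and is a sketch rather than a tuned implementation.

```python
import threading


class Rendezvous:
    """Unbuffered channel: send() blocks until a receiver has taken the value;
    receive() blocks until a sender has supplied one."""

    def __init__(self):
        self._cond = threading.Condition()
        self._item = None
        self._full = False      # a value is on offer
        self._taken = False     # the offered value has been collected

    def send(self, item):
        with self._cond:
            while self._full:               # wait for any previous exchange to finish
                self._cond.wait()
            self._item, self._full, self._taken = item, True, False
            self._cond.notify_all()         # wake a waiting receiver
            while not self._taken:          # block until the receiver arrives
                self._cond.wait()
            self._full = False
            self._cond.notify_all()         # let the next sender proceed

    def receive(self):
        with self._cond:
            while not self._full or self._taken:
                self._cond.wait()
            item = self._item
            self._taken = True
            self._cond.notify_all()         # release the blocked sender
            return item


ch = Rendezvous()
events = []
events_lock = threading.Lock()


def record(event):
    with events_lock:
        events.append(event)


def producer():
    for i in range(3):
        ch.send(i)
        record(("sent", i))   # reached only after the receiver has taken i


def consumer():
    for _ in range(3):
        record(("got", ch.receive()))


threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(events)
```

Because each `send()` waits for its matching `receive()`, the sender can never get more than one message ahead: "sent 1" always follows "got 0", and so on.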

Don’t forget ‘threads’ outside the processor(s)

When synchronising threads it is important to remember that ‘threads’ may not only be running on a ‘processor’. A good example is a DMA (direct memory access) transfer: a ‘background’ movement of data, for example data being fetched from a file on a disk. A thread on a processor may initiate the transfer … and then do something else; it is essential that no thread uses the data until it is certain that it has been transferred!
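Real DMA is programmed through device registers and normally signals completion with an interrupt, which cannot be shown portably. The Python sketch below only models the idea: a background thread (the hypothetical `dma_engine`) stands in for the DMA hardware, and a `threading.Event` stands in for its completion signal. The initiating thread must wait on that signal before it reads the buffer.

```python
import threading
import time

buffer = bytearray(8)
transfer_done = threading.Event()   # stands in for the DMA "completion" interrupt


def dma_engine(src):
    # Simulated background device: copies data into `buffer` in chunks,
    # independently of the thread that started the transfer.
    for i in range(0, len(src), 2):
        time.sleep(0.01)                  # pretend the device is slow
        buffer[i:i + 2] = src[i:i + 2]
    transfer_done.set()                   # signal: transfer is complete


threading.Thread(target=dma_engine, args=(b"ABCDEFGH",)).start()

# ... the initiating thread is free to do other work here ...

transfer_done.wait()      # must not touch `buffer` until this returns
print(bytes(buffer))      # b'ABCDEFGH'
```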

These days, there may be other hardware running semi-autonomously; a GPU is a good example.