Fragmentation

| Depends on | Filing System Implementation |

|---|

Some computer resources are indivisible items (e.g. keyboard); some can be timeshared (e.g. processor); some can be ‘physically’ divided to satisfy different requirements at the same time.

(Okay – not literally “physically”…)

In this latter category, two important resources are:

- memory (i.e. RAM)

- (magnetic) disk filestore

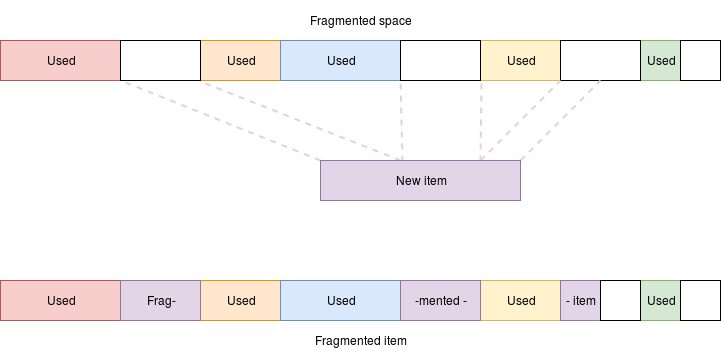

In both these cases there is some concept of an ‘address space’ (typically bytes in memory, blocks on disk) which is one dimensional. In both cases it is possible to imagine scenarios where some required amount of a resource is available, but there are already parts allocated which mean there is no single, available ‘hole’ which will accommodate a new request.

This typically results from different sized items being allocated and some of them later being removed.

Schemes exist in both these examples to overcome some of the resultant difficulties. In some cases these can result in fragmentation, where one big ‘thing’ is subdivided into more, smaller ‘things’.

Memory

In a virtual memory system the physical RAM can be remapped arbitrarily at the page level.

However, within a process’ virtual space, dynamic allocation and deallocation can fragment the memory at an arbitrary scale.

Too much fragmentation can result in a failure to allocate some desired space, even if the space is, in principle, available because there is no single large-enough area.

Filestore

It is usual for a filing system to allocate space on a block-by-block basis and these can be mapped arbitrarily. (An illustration can be seen in the FAT description.)

All the disk blocks can be used, but if they become dispersed it can slow down access. This is because (unlike RAM) the disk is not truly ‘random access’ and will normally be formatted such that, having read block 1205 (say) the most accessible block will be 1206.

Defragmentation

Because fragmentation causes inefficiency, at least, effort may be put into defragmentation.

Memory

When virtual memory is freed it can be worth the (extra) effort to try

to amalgamate the free block(s) with the existing free space. This

can be done as part of the free() process (at an immediate cost) or

as a background task. Garbage collectors will probably do this, for

example.

Filestore

This can take significant effort. Periodic, offline defragmentation may be done as trying to move file blocks whilst they may be in use can prove tricky.

There’s a little animated demonstration on Wikipedia.

A common countermeasure is to partition the disk such that files such as system software – which tend to be read-only – are kept in an undisturbed (tidy?!) state whereas user files – which change a lot – are kept in a different area. The frequently used utilities will then load at peak speeds.

Another example?

Historically, memory segments sometimes had to be contiguous areas of physical (as well as virtual) address space. Segments are, of course, different sizes … just like files.

Most modern systems will support segmentation using pages so they can divide a segment into scattered blocks. In this case, fragmentation is not a penalty because RAM access is more truly ‘random’; i.e. the performance is independent of the address.